SEE BELOW FOR RESEARCH ARTICLES SUPPORTED BY THIS TRIPODS PROJECT.

Machine Learning

Research at the institute focuses on mathematical techniques related to machine learning (ML). In particular, researchers involved with the institute are interested in open questions related to the training of deep neural networks, which have revolutionized the ability of computers to perform perceptual tasks such as image classification, speech recognition, machine translation, and many others.

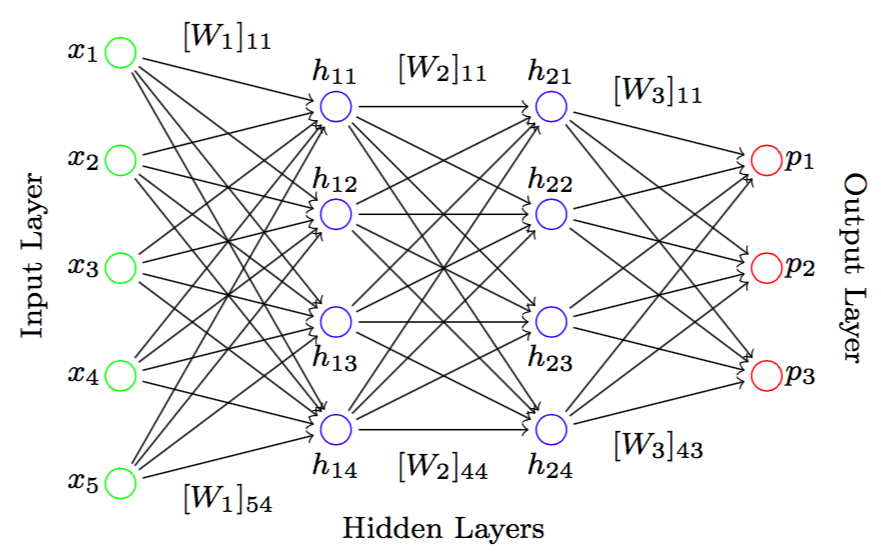

Inspired by models in neuroscience, deep neural networks can be understood as computational graphs that take an input \(x\) and produce an output \(p\); see the accompanying figure. Deep learning is the process of determining weights for the computational graph (the \(W\) values in the figure) so that the output \(p\) matches the desired output \(y\) corresponding to \(x\).
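The computational-graph view described above can be made concrete with a minimal sketch. The layer sizes, the tanh and softmax functions, and the numerical weights below are illustrative assumptions, not the architecture shown in the figure.

```python
import math

def forward(x, weights):
    """Evaluate a small fully connected network on the input vector x.

    `weights` is a list of matrices (lists of rows), one per layer; they
    play the role of the W values. Hidden layers apply tanh, and the last
    layer applies a softmax so that the output p is a probability vector.
    Both choices are assumptions made for this illustration.
    """
    a = list(x)
    for k, W in enumerate(weights):
        # Each node computes a weighted sum of the previous layer's values.
        z = [sum(w_ij * a_j for w_ij, a_j in zip(row, a)) for row in W]
        if k < len(weights) - 1:
            a = [math.tanh(z_i) for z_i in z]
        else:
            m = max(z)  # subtract the max for numerical stability
            e = [math.exp(z_i - m) for z_i in z]
            s = sum(e)
            a = [e_i / s for e_i in e]
    return a

# Hypothetical weights: 2 inputs -> 3 hidden nodes -> 2 outputs.
W1 = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
W2 = [[0.2, -0.5, 0.3], [-0.4, 0.1, 0.6]]
p = forward([1.0, 2.0], [W1, W2])
```

Changing any entry of `W1` or `W2` changes `p`, which is why training amounts to searching over the weights.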

The weights for the graph are determined through mathematical optimization. Starting from an initial set of weights \(W\), an optimization algorithm sequentially perturbs the weights based on given input-output pairs to produce a set of weights that better predicts outputs for arbitrary inputs. Researchers at the Institute for Optimization and Learning are experts in the use of deep neural networks and the optimization methods required to train them.
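As a rough illustration of weights being perturbed one input-output pair at a time, the sketch below runs stochastic gradient descent on a two-weight linear model. The text does not name a specific algorithm, so the method, the model, the step size, and the data here are all assumptions chosen for simplicity.

```python
import random

def sgd(pairs, w, step=0.05, epochs=200, seed=0):
    """Stochastic gradient descent for the model p = w[0] + w[1] * x
    with squared loss (p - y)**2 / 2 on each input-output pair."""
    rng = random.Random(seed)
    w = list(w)
    pairs = list(pairs)
    for _ in range(epochs):
        rng.shuffle(pairs)
        for x, y in pairs:
            r = w[0] + w[1] * x - y  # prediction error on this pair
            w[0] -= step * r         # derivative of the loss w.r.t. w[0] is r
            w[1] -= step * r * x     # derivative of the loss w.r.t. w[1] is r * x
    return w

# Hypothetical noise-free data generated by y = 1 + 2x.
data = [(k / 4.0, 1.0 + 2.0 * (k / 4.0)) for k in range(-4, 5)]
w = sgd(data, [0.0, 0.0])
```

This toy problem is convex, so the iterates approach the weights that generated the data. Training a deep neural network follows the same update pattern, but on a nonconvex function with far more weights.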

Optimization

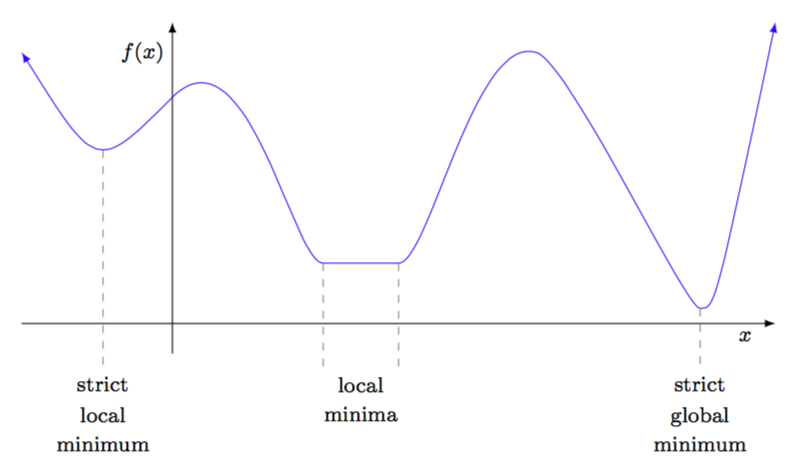

The optimization problems that arise in deep learning are extremely difficult to solve. In particular, these problems are challenging due to the nonconvexity of the functions that one aims to minimize. A nonconvex function is difficult to minimize because of the presence of “local” minima (that are not “global” minima), “saddle points,” and other factors; see the accompanying figure.

The function in this figure does not completely represent the function that one aims to minimize in a deep learning setting. In fact, a challenge in deep learning is that researchers do not entirely understand the “landscape” of the function when training a deep neural network. One of the goals of researchers at the institute is to understand the properties of these functions and design and analyze new optimization methods for finding their minimizers.
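The effect of local minima and saddle points can be seen even on toy functions. The sketch below uses a hypothetical one-dimensional quartic and a two-variable quadratic, neither of which is meant to resemble the function that arises when training a deep neural network.

```python
def f(x):
    """A nonconvex function with one global and one non-global local minimum."""
    return x**4 - 3.0 * x**2 + x

def df(x):
    """Derivative of f."""
    return 4.0 * x**3 - 6.0 * x + 1.0

def gradient_descent(x, step=0.01, iters=5000):
    """Plain gradient descent with a fixed step size (an assumed choice)."""
    for _ in range(iters):
        x -= step * df(x)
    return x

# The same method, started from two different points, stops at two
# different stationary points with different function values.
x_left = gradient_descent(-2.0)
x_right = gradient_descent(2.0)

def g(u, v):
    """The gradient of g vanishes at (0, 0), yet g increases along u and
    decreases along v there, so the origin is a saddle point."""
    return u * u - v * v
```

Here `f(x_left)` is smaller than `f(x_right)`, so the run started at `2.0` ends at a local minimum that is not global. A method that only checks for a zero gradient would likewise accept the origin of `g`, even though it is not a minimizer.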

Tutorials

For more information on optimization methods for machine learning, please see the tutorials below:

- “Optimization Methods for Supervised Machine Learning: From Linear Models to Deep Learning,” by Frank E. Curtis and Katya Scheinberg

- “Optimization Methods for Large-Scale Machine Learning,” by Léon Bottou, Frank E. Curtis, and Jorge Nocedal

Publications

[bibtex file=bibsources/tripods_fec.bib,bibsources/tripods_francesco.bib,bibsources/tripods_katya.bib,bibsources/tripods_martin.bib sort=firstauthor order=asc]